EU AI Act compliance: why ‘high risk’ doesn’t always mean high cost

Simon Montgomery, Chief Operating Officer

The European Artificial Intelligence Act (EU AI Act) is sending ripples through boardrooms across Europe. For businesses that rely on biometric technology, the phrase “high risk” has triggered particular alarm.

At a recent meeting, one organisation using ID-Pal’s identity verification solution updated us on their plans to comply with the EU AI Act. Their worry? That the safeguards and governance required for EU AI Act compliance could cost millions to implement, with a looming deadline of August 2026.

And given that non-compliance carries penalties of up to €35 million or 7% of global annual turnover, whichever is higher, the anxiety was understandable.
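To put that ceiling in concrete terms, here is a minimal sketch of the arithmetic. It assumes the cap is the higher of the two figures quoted above, which is the usual reading for larger companies; the function name and parameters are illustrative only, and the fine actually imposed in any given case may be far lower.

```python
def max_penalty_eur(global_annual_turnover_eur: int,
                    fixed_cap_eur: int = 35_000_000,
                    turnover_percent: int = 7) -> int:
    """Return the penalty ceiling in whole euros.

    Assumption for this sketch: the ceiling is the higher of the fixed
    amount and the given percentage of global annual turnover.
    """
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Integer arithmetic keeps the result exact for whole-euro inputs.
    turnover_based_cap = global_annual_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based_cap)


# A company turning over EUR 1 billion: 7% exceeds the fixed amount.
large_company_cap = max_penalty_eur(1_000_000_000)

# A company turning over EUR 100 million: the fixed amount is the higher figure.
mid_sized_company_cap = max_penalty_eur(100_000_000)
```

For any business with global turnover above €500 million, the percentage figure is the one that sets the ceiling.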

But here’s the thing: their fears were misplaced.

What is the European Artificial Intelligence Act?

The EU Artificial Intelligence Act classifies AI systems by risk level, based on their potential to impact people’s fundamental rights and safety. The levels range from unacceptable (prohibited) through high and limited to minimal or no risk.

The EU AI Act includes specific regulations regarding biometrics, which are crucial for ensuring ethical use of AI technologies.

Categories for biometrics in the EU AI Act

Unacceptable risk (prohibited)

Real-time remote biometric recognition in public spaces by law enforcement is banned because of the clear threat to citizens’ rights posed by such surveillance, unless under strictly defined exceptions (e.g., to prevent terrorism or locate missing persons).

By February 2025, prohibited AI systems must cease operating.

High risk

Remote biometric recognition systems used in border control, in critical infrastructure such as transport and energy, or to evaluate eligibility for essential public or private services such as healthcare or credit are categorised as ‘high risk’. These systems are not prohibited, but they require strict compliance measures, risk assessment and human oversight to be in place.

By August 2026, high-risk AI systems must be fully compliant.

Limited or minimal risk

Biometric recognition systems used for authentication, such as face recognition to unlock a phone, are low risk and carry only basic transparency obligations. Systems that are accessed offline and in person, with the active participation of the individual, are deemed to present no risk and are largely unregulated.

By 2 August 2027, all systems, without exception, must meet the obligations of the EU AI Act.

Each category comes with mandatory compliance deadlines, ranging from February 2025 for prohibited systems to August 2026 for high-risk systems, and August 2027 when all systems must meet baseline obligations.

On paper, biometric recognition looks like a high-risk use case. But there’s a critical EU AI Act biometric clause that changes that story and saves organisations millions of euros.

What the European Artificial Intelligence Act actually states

What many companies and indeed many other providers are failing to do is read the Act in its entirety. We took it upon ourselves to do this and have a supplementary assessment to back up our findings. As an AI-powered solution, we needed to be on top of every requirement of the Act to properly comply and also to advise our customers.

Annex III: the overlooked EU AI Act biometric clause

Buried in Annex III of the legislation is an important EU AI Act compliance clause:

“This shall not include AI systems intended to be used for biometric verification, the sole purpose of which is to confirm that a specific natural person is the person he or she claims to be.”

The ‘biometric verification’ named in this clause is the process of confirming a person’s identity by reference to physical characteristics – face matching at customer onboarding, for example. This process is not automatically categorised as “high risk” under Article 6(2) and therefore does not require full high-risk compliance by August 2026.

The EU AI Act treats remote biometric identification as high-risk, but Annex III explicitly excludes AI used solely for biometric verification to confirm that someone is who they claim to be.

It’s a distinction many businesses miss, but it can save organisations millions in costly compliance programmes.

What this means for organisations using biometrics in customer onboarding

If your company uses biometric recognition to determine access to essential services such as healthcare, education, employment or financial services, you may fall under the high-risk rules.

But if you use biometrics solely for verification, that is, “to confirm that a specific natural person is the person he or she claims to be”, the Annex III clause exempts you from the high-risk compliance track.

This is the nuance that businesses need to understand: not every biometric solution is a high-risk AI system.
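The distinction can be sketched as a simple triage step. This is a deliberately simplified illustration of the reasoning in this article, not a legal test: the class, field and function names are all hypothetical, and a real classification depends on the full text of the Act and on how a system is actually deployed.

```python
from dataclasses import dataclass
from enum import Enum


class Track(Enum):
    PROHIBITED = "unacceptable risk (prohibited)"
    HIGH_RISK = "high risk"
    OUTSIDE_HIGH_RISK = "outside the high-risk track"
    NEEDS_REVIEW = "needs a closer legal review"


@dataclass(frozen=True)
class BiometricUse:
    # Hypothetical labels for this sketch, not terms defined in the Act.
    verification_only: bool       # one-to-one: "is this the person they claim to be?"
    remote_identification: bool   # one-to-many: "who is this person?"
    realtime_public_law_enforcement: bool = False
    strict_exception_applies: bool = False  # e.g. preventing terrorism, locating missing persons


def triage(use: BiometricUse) -> Track:
    """Map a biometric use case onto the categories described in this article."""
    if use.realtime_public_law_enforcement and not use.strict_exception_applies:
        return Track.PROHIBITED
    if use.remote_identification:
        return Track.HIGH_RISK
    if use.verification_only:
        # The Annex III carve-out: the sole purpose is confirming a claimed identity.
        return Track.OUTSIDE_HIGH_RISK
    # Anything that fits none of the patterns above is not decided by this sketch.
    return Track.NEEDS_REVIEW


# Face matching at customer onboarding, used only to confirm a claimed identity.
onboarding = BiometricUse(verification_only=True, remote_identification=False)
onboarding_track = triage(onboarding)
```

The order of the checks matters: a system only reaches the verification carve-out if it is not also being used to identify people remotely.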

Staying ahead of the European Artificial Intelligence Act

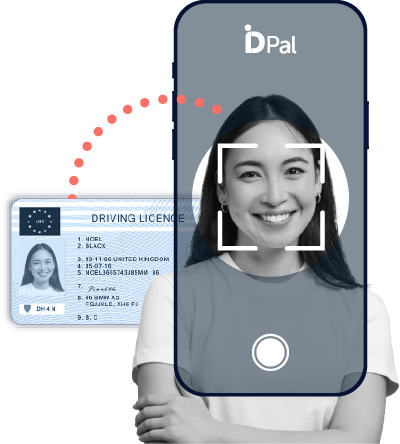

At ID-Pal, our AI-powered facial matching technology runs a 50-point biometric comparison between a live face capture and the image on an identity document. It maps the biometric features across the facial images presented, in real time, to confirm whether they show the same person.

As a NIST- and iBeta-certified provider, we partner with organisations across Europe and beyond to ensure compliance with evolving regulations. Our team continually monitors laws and upcoming regulation like the European Artificial Intelligence Act to keep our customers informed and prepared.

Why ID-Pal keeps you ahead of EU AI Act compliance

The EU AI Act biometric clause in Annex III is not common knowledge. Many businesses, and even many biometric verification vendors, are still bracing for high-risk compliance costs they may never face.

At ID-Pal, we’ve built our solution using the best AI has to offer, freeing compliance teams from the burden of manual checks and empowering them with automation without compromising on quality. Synthetic IDs and deepfakes generated by AI have changed the face of fraud. AI can spot what’s invisible to the human eye, so we need to use this technology against fraudsters.

That’s why organisations globally trust us: because our AI-powered identity verification isn’t just fast, accurate, and fraud-resistant – it’s designed to keep you compliant without the unnecessary cost burden.

Other providers deliver IDV; only ID-Pal shows you how to navigate the hidden clauses of the EU AI Act so you avoid being trapped in the wrong compliance category.

If your business wants to stay compliant, cut fraud, and avoid the multimillion-euro costs of misclassified “high-risk” AI, speak to ID-Pal today.